With the baseprompt and game logic in place, and the Anthropic and Google APIs working nicely (see Cleanerbot Rescue, part 1 – using AI to code and simplify game logic in a text adventure), I was ready to make the game more accessible and put it online. This article covers some of the challenges I faced, with links to the web version of the AI-driven game and, once you’ve finished playing, its source code on GitHub.

I decided to build it using Flask because that is what I know best, and to host it on Google Cloud’s App Engine because a) they keep bugging me about free credits I can use, and b) it seemed the easiest way to get a Flask server up without having to use Docker. I’ll learn to use Docker one day, but the learning curve on this project was already steep enough.

I’ve already built a bunch of Flask sites, but it saved a lot of time to have ChatGPT set up the basic template: an index.html front end which talks to a main.py back end. With a version of the game already working in a local venv, most of the work fell into five buckets:

- Creating a web front end

- Splitting functionality between back end and front end

- Adding save / load functionality

- Fine-tuning the APIs – Claude gets a bit spooky

- Creating my first Google Cloud environment

1. Creating a web front end

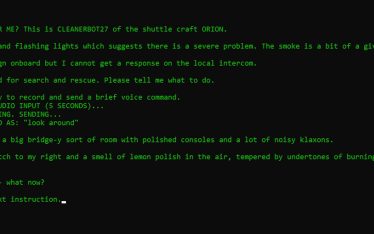

I took a few shortcuts here because I didn’t want to spend too much time obsessing over the minutiae of look and feel. After a quick web search I got lucky and came across this tutorial from Chris Coyier, which I used to present the text of my text adventure in a kind of pseudo-terminal. I really liked the blurry ‘glow’ of the text, but my focus group found it distracting, so removing it was an easy fix.

Splitting the screen into header, main and footer was handled by one ChatGPT request, and making it responsive by another. Fine-tuning positions was a combination of me fiddling around and occasionally sending screenshots to ChatGPT.

Over time I generated footers for circumstances beyond the default: one for loading previous games, one for timed-out sessions (which removes the input UI and asks the player to reload the page), and a similar one which thanks the user for playing and replaces the game UI with social sharing buttons.

ChatGPT also came in handy for presenting a series of messages next to the pressed button (Recording, Sending, Suspense Builds and Decoding) to distract and reassure the player when a response takes a long time to generate. I just described what I wanted, the timing of the sequence, and how it should stop as soon as a response is received and displayed to the user. A fiddly bit of JavaScript which I didn’t have to think too hard about.
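The real sequence lives in JavaScript in index.html. As a rough sketch of the same idea, assuming an asyncio-style event loop rather than the browser’s timers, the Python below cycles through the four status messages while waiting for a reply and stops the moment the reply arrives. The `show` callback and the 1.5-second interval are hypothetical stand-ins for updating the page.

```python
import asyncio
from typing import Awaitable, Callable

# The four status messages named above, shown in order.
STATUS_MESSAGES = ["Recording", "Sending", "Suspense Builds", "Decoding"]


async def cycle_status(show: Callable[[str], None], interval: float) -> None:
    """Show each status message in turn; the last one stays up until the reply arrives."""
    for message in STATUS_MESSAGES:
        show(message)
        await asyncio.sleep(interval)


async def await_with_status(
    response: Awaitable[str],
    show: Callable[[str], None],
    interval: float = 1.5,  # hypothetical delay between messages, in seconds
) -> str:
    """Run the status ticker until `response` resolves, then stop it and clear the status."""
    ticker = asyncio.create_task(cycle_status(show, interval))
    try:
        return await response
    finally:
        ticker.cancel()  # stop the sequence as soon as the reply is in
        show("")  # clear the status area
```

Putting the stop in a `finally` clause means the messages also stop if the request fails, not just when it succeeds.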

The final front end change was the addition of a WTF (“What’s This For?”) button which points at this tutorial.

2. Splitting functionality between back end and front end

I wish I had thought of all these things at the start, but trial and error helped me figure them out.

The main issue was audio recording. Users on laptops and desktops could click the [SEND COMMAND] button and start the flow which records their voice command, transcribes it to text and sends it to Claude. Mobile users had more of a problem, as the codec I used for this wasn’t available to them. A simple IF statement in the front end to find one which works, combined with a tweak to the back end to handle multiple input types, sorted that out. This is the kind of thing where ChatGPT came into its own: I asked it to select the codecs most commonly used on mobile phones and rewrite the snippets in the two files accordingly, making sure that these inputs were compatible with the Google Speech API I was using.
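On the back end, handling multiple input types can be as small as a lookup from the MIME type the browser reports to the encoding the speech API expects. The sketch below assumes the uploaded audio arrives together with its MIME type, and uses a hypothetical mapping onto Google Speech-to-Text v1 `RecognitionConfig.AudioEncoding` names; the exact codecs the game settled on aren’t listed in this post. The `first_supported` helper mirrors the front-end IF check, with `is_supported` standing in for the browser’s `MediaRecorder.isTypeSupported`.

```python
from typing import Callable, Iterable, Optional

# Hypothetical mapping from browser recording MIME types (parameters stripped)
# to Google Speech-to-Text v1 AudioEncoding names.
ENCODING_FOR_MIME = {
    "audio/webm": "WEBM_OPUS",  # assumes the Opus codec inside WebM
    "audio/ogg": "OGG_OPUS",    # assumes the Opus codec inside Ogg
    "audio/wav": "LINEAR16",
    "audio/x-wav": "LINEAR16",
    "audio/flac": "FLAC",
}


def first_supported(candidates: Iterable[str], is_supported: Callable[[str], bool]) -> Optional[str]:
    """Return the first MIME type the recorder supports, or None if none do."""
    for mime_type in candidates:
        if is_supported(mime_type):
            return mime_type
    return None


def speech_encoding_for(mime_type: str) -> str:
    """Map e.g. 'audio/webm;codecs=opus' to 'WEBM_OPUS'; reject anything unknown."""
    base_type = mime_type.split(";", 1)[0].strip().lower()
    try:
        return ENCODING_FOR_MIME[base_type]
    except KeyError:
        raise ValueError(f"Unsupported audio type: {mime_type!r}") from None
```

Rejecting unknown types with a clear error is deliberate: it is easier to debug than a transcription that silently comes back empty.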

3. Adding save / load functionality

In a text adventure game this feature is crucial, and it’s handy for debugging if I don’t have to start from square one every time. I wanted to avoid asking players for login information, so I couldn’t use something like Firebase to store player data. Instead, when the player gives the command ‘save game’, the Flask app takes all their game variables (location, inventory etc) and saves them to a CSV along with a random 3-word code phrase. Any player who chooses ‘load game’ and then submits that 3-word code phrase gets teleported to that point in the game.
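A minimal sketch of that save/load flow, assuming one CSV row per save with the code phrase in the first column and the game variables serialised as JSON in the second (the post doesn’t say how the columns are laid out). The file path and the state keys in any usage are hypothetical.

```python
import csv
import json
from pathlib import Path
from typing import Any, Dict, Optional


def normalise_phrase(phrase: str) -> str:
    """Make lookups forgiving of capitalisation and extra spaces."""
    return " ".join(phrase.lower().split())


def save_game(path: Path, phrase: str, state: Dict[str, Any]) -> None:
    """Append one save: the code phrase plus all game variables as a JSON string."""
    with path.open("a", newline="", encoding="utf-8") as f:
        csv.writer(f).writerow([normalise_phrase(phrase), json.dumps(state)])


def load_game(path: Path, phrase: str) -> Optional[Dict[str, Any]]:
    """Return the most recent state saved under `phrase`, or None if there isn't one."""
    if not path.exists():
        return None
    wanted = normalise_phrase(phrase)
    found = None
    with path.open(newline="", encoding="utf-8") as f:
        for row in csv.reader(f):
            if len(row) == 2 and row[0] == wanted:
                found = json.loads(row[1])  # later rows win, so a re-save replaces the old one
    return found
```

Storing the state as a JSON string keeps nested values, such as an inventory list, intact inside a single CSV cell; the `csv` module takes care of quoting the commas inside it.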

It worked so well that I retrospectively added it to the original local version of Cleanerbot Rescue.

The trickiest part was generating the code phrases. The app picks a random word from each of three text files, but first I had to generate the word lists. 300 or so words in each list gives around 27 million (300 × 300 × 300) unique combinations, which is plenty. I wrote a pretty elaborate prompt asking both ChatGPT and Claude to write three lists containing a total of 1,000 common verbs and adjectives, avoiding any homophones, proper nouns, or words of more than three syllables. They both did OK on the word count, but the word lists weren’t exactly what I wanted. In the end I just had ChatGPT give me a list of 1,500 common words and then manually scrolled through them, cutting out anything which could cause confusion.
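A minimal sketch of the phrase picker, assuming one word per line in each of the three text files. It uses Python’s `secrets` module rather than `random` because the phrase works a bit like a password; that choice, and the helper names, are illustrative rather than taken from the game’s code.

```python
import math
import secrets
from pathlib import Path
from typing import List, Sequence


def load_word_list(path: Path) -> List[str]:
    """Read one word per line, ignoring blank lines and stray whitespace."""
    lines = path.read_text(encoding="utf-8").splitlines()
    return [line.strip().lower() for line in lines if line.strip()]


def make_code_phrase(word_lists: Sequence[Sequence[str]]) -> str:
    """Pick one random word from each list and join them with spaces."""
    return " ".join(secrets.choice(words) for words in word_lists)


def phrase_space(word_lists: Sequence[Sequence[str]]) -> int:
    """Number of possible phrases: the product of the list lengths (assumes no duplicates within a list)."""
    return math.prod(len(words) for words in word_lists)
```

Usage would be along the lines of `make_code_phrase([load_word_list(Path(n)) for n in ("list1.txt", "list2.txt", "list3.txt")])`, where those file names are placeholders; with three 300-word lists, `phrase_space` gives 27,000,000.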

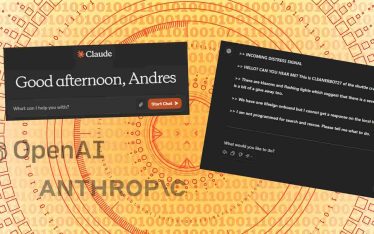

4. Fine-tuning the APIs – Claude gets a bit spooky

With Anthropic’s Claude processing player intent through the baseprompt, I tinkered with using different models as the brain of the game engine. I started with their largest LLM at the time, Opus, then moved down to Sonnet and ended up using Haiku, their smallest model. Once I was satisfied that Haiku could apply the instructions in the baseprompt to the wide range of player inputs (there are lots of ways to tell a Cleanerbot to search a room and tell you what it can see), I was happy to switch to it for two reasons: its size means faster responses, and it’s cheaper to use.
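Because only the `model` string changes, swapping between Opus, Sonnet and Haiku can be a one-word config change. A sketch using the Messages API of Anthropic’s Python SDK: the model IDs are the Claude 3 names from around that time and are an assumption about which versions the game used, as is the small `max_tokens` value, which only needs to cover a short CommandCode reply. The client is passed in, so it can be the real SDK client or a stub.

```python
from typing import Any, Dict

# Assumed model IDs (Claude 3 family); change the key to swap the game's "brain".
MODELS: Dict[str, str] = {
    "opus": "claude-3-opus-20240229",
    "sonnet": "claude-3-sonnet-20240229",
    "haiku": "claude-3-haiku-20240307",
}


def interpret_command(client: Any, baseprompt: str, player_input: str, model: str = "haiku") -> str:
    """Send the player's input to Claude with the baseprompt as the system prompt."""
    message = client.messages.create(
        model=MODELS[model],
        max_tokens=20,  # assumed: enough for "Command understood: 0022"
        system=baseprompt,
        messages=[{"role": "user", "content": player_input}],
    )
    # The reply text is in the first content block.
    return message.content[0].text
```

With the `anthropic` package installed and `ANTHROPIC_API_KEY` set, `interpret_command(anthropic.Anthropic(), baseprompt, "tell me about yourself")` would return Claude’s raw reply for the game logic to parse.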

Switching between LLMs did turn up one weird behaviour. The baseprompt instructs Claude to take the player’s input, interpret the player’s intention and return a 4-digit CommandCode. That code is then used by the app’s game logic to show the player what happens next. So all I want is that CommandCode, but after a while I found that Claude was adding extra content to its responses. For example, if a player asks Cleanerbot to tell us about itself, Claude should return ‘Command understood: 0022’. Instead it gave:

Command understood: 0022 I am the Cleanerbot, also known as the Cleaner bot or Cleanerbot27. My purpose is to assist the crew of the Orion spacecraft by performing cleaning and maintenance tasks as needed. I can search, explore, move around the ship, open and close access panels, clean and polish surfaces, and provide information about the ship and its systems. Please let me know if there is anything specific I can help with.

Not only was this excessive (the game logic just searches the response for ‘0022’), it was a bit spooky, because Claude had put together this information about its purpose and capabilities from its understanding of the baseprompt. I hadn’t explicitly given it this description. Even though I was delighted by this, I updated the baseprompt to eliminate this kind of output: it’s consumed by the game engine, not the player, and the game engine only wants the CommandCode. That saved me a few tokens and some processing time too.
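Since the game engine only needs the four digits, it helps to parse the reply defensively as well as tightening the prompt. A sketch, assuming replies follow the ‘Command understood: NNNN’ pattern quoted above: it pulls out the code, ignores anything Claude appends, and returns `None` if no code is present. The prompt wording in the comment is a hypothetical example, not the game’s actual baseprompt.

```python
import re
from typing import Optional

# Hypothetical baseprompt line to discourage extra output:
#   "Reply with exactly 'Command understood: ' followed by the 4-digit CommandCode, and nothing else."
COMMAND_PATTERN = re.compile(r"Command understood:\s*(\d{4})\b")


def extract_command_code(reply: str) -> Optional[str]:
    """Return the 4-digit CommandCode from Claude's reply, or None if there isn't one."""
    match = COMMAND_PATTERN.search(reply)
    return match.group(1) if match else None
```

Anchoring on the ‘Command understood:’ prefix, rather than searching for any four digits, stops stray numbers in the extra text from being mistaken for a command.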

The other change was removing the text-to-speech functionality that let (spoiler alert) the character called OSCAR speak to the player. I’ve left a lot of the code in the version of index.html you’ll find on GitHub in case you can get it working, but after a lot of experimentation with different models there was still too much latency between his words appearing on the player’s screen and his voice reading them aloud. It worked fine in the local version, which used pyttsx3 to generate speech instantly, but the online version needed to use Google’s text-to-speech API and the voice just didn’t stay in sync with the text. Your experience might be different, and if you come up with a fix, let me know, because players are disproportionately delighted when they hear the game talking back to them.

5. Creating my first Google Cloud environment

This was my first time using Google Cloud and it was pretty straightforward. In fact the only real problem I had was because I got caught in a bugfix loop with ChatGPT until common sense pulled me out. See my earlier post about how to avoid mistakes with an AI as your coding copilot.

As the online version has to deal with multiple simultaneous players, I needed to introduce session handling. I reckoned the best way to do this was to create a unique session file as soon as the index.html page loads, containing the game variables (location, inventory etc) for the back end to maintain, with the webpage reading the same file to get the game logic’s responses to the player’s latest command. I told ChatGPT what I wanted and we made the updates with minimal trial and error.

One peculiarity it didn’t warn me about until things broke was that these session files couldn’t be written to the same environment as the Flask code. So while the Flask app and HTML sat in Google App Engine, the session files were created, written, read and deleted in a separate Google Cloud Storage bucket. No big deal, but it was annoying to realise that my helpful teammate wasn’t smart enough to give me a heads-up.
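That matches how the App Engine standard environment works: the filesystem is read-only apart from `/tmp`, which lives in each instance’s memory and isn’t shared between instances. A sketch of a bucket-backed session store, assuming one JSON object per session named after a random ID; the `sessions/` prefix is hypothetical. The bucket is passed in, so in production it would be something like `google.cloud.storage.Client().bucket("your-bucket-name")` (bucket name is a placeholder), and in tests a stand-in with the same `blob()` / `upload_from_string()` / `download_as_text()` / `delete()` methods.

```python
import json
import uuid
from typing import Any, Dict


class SessionStore:
    """One JSON file per player session, kept in a Cloud Storage bucket.

    `bucket` is expected to behave like google.cloud.storage.Bucket:
    bucket.blob(name) returns an object with upload_from_string(),
    download_as_text() and delete().
    """

    def __init__(self, bucket: Any, prefix: str = "sessions/") -> None:
        self.bucket = bucket
        self.prefix = prefix  # hypothetical folder-style prefix inside the bucket

    def _blob(self, session_id: str) -> Any:
        return self.bucket.blob(f"{self.prefix}{session_id}.json")

    def create(self, initial_state: Dict[str, Any]) -> str:
        """Meant to run when index.html first loads: pick a random ID and write the starting state."""
        session_id = uuid.uuid4().hex
        self.save(session_id, initial_state)
        return session_id

    def save(self, session_id: str, state: Dict[str, Any]) -> None:
        self._blob(session_id).upload_from_string(json.dumps(state), content_type="application/json")

    def load(self, session_id: str) -> Dict[str, Any]:
        return json.loads(self._blob(session_id).download_as_text())

    def delete(self, session_id: str) -> None:
        self._blob(session_id).delete()
```

Keeping the bucket as a parameter means the same class can run locally against a fake and on App Engine against the real bucket.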

That wasn’t the main problem, though. Uploading my Python environment to Google App Engine kept failing, and figuring out why is what tipped ChatGPT into a spiral. Initially I was using PyAudio to handle audio input before passing it to the Google Speech API, and App Engine kept tripping over its installation. After I figured that bit out (I asked ChatGPT to parse the install logs), it told me that App Engine does not support PyAudio, but that I could get it working by including an additional library in the install. That was the hallucination which started the spiral: try this, try that, update that code…

Eventually I (got some sleep and) realised what the problem was. What does PyAudio do? It captures the user’s audio input so that my code can pass it to the Google Speech API for transcription. And in that first step it accesses the microphone on the machine the code is running on. That’s fine when you’re running code on a laptop, but not so helpful when the machine is in a distant server farm. So the way out of the spiral wasn’t to figure out how to make App Engine install PyAudio, but to use something completely different.

Just as I described in my original Cleanerbot Rescue post about how to avoid mistakes with an AI as your coding assistant: even if you mitigate the potential problems, and even with the most detailed of prompts, your AI coding assistant doesn’t know everything, and the role of main architect still sits, for now, with the human.