One of the things that drove me crazy about text adventure games when I was a kid was intent: matching what I wanted my character to do to however the developer thought it should be described. “Get the book” vs “Take the book” vs “Grab the book” vs “Pick up the book”.

Just like the challenges we face when working with other AI-powered apps, either the developer has to anticipate every way a player can describe an action, or the player has to figure out what works. Can cheap, fast natural language processing (NLP) help fix that? Could I find out without building just another chatbot?

Well, yeah. But stick around and I’ll explain how I did it and the challenges along the way, and share the source code of my first AI-powered text adventure game.

The first step was to come up with a scenario that would fit my limitations as a developer, the technology and my budget. So I started small, with a game played entirely within the command line, which meant I could build something that just ran locally. I cover putting the game online in part 2 of this series.

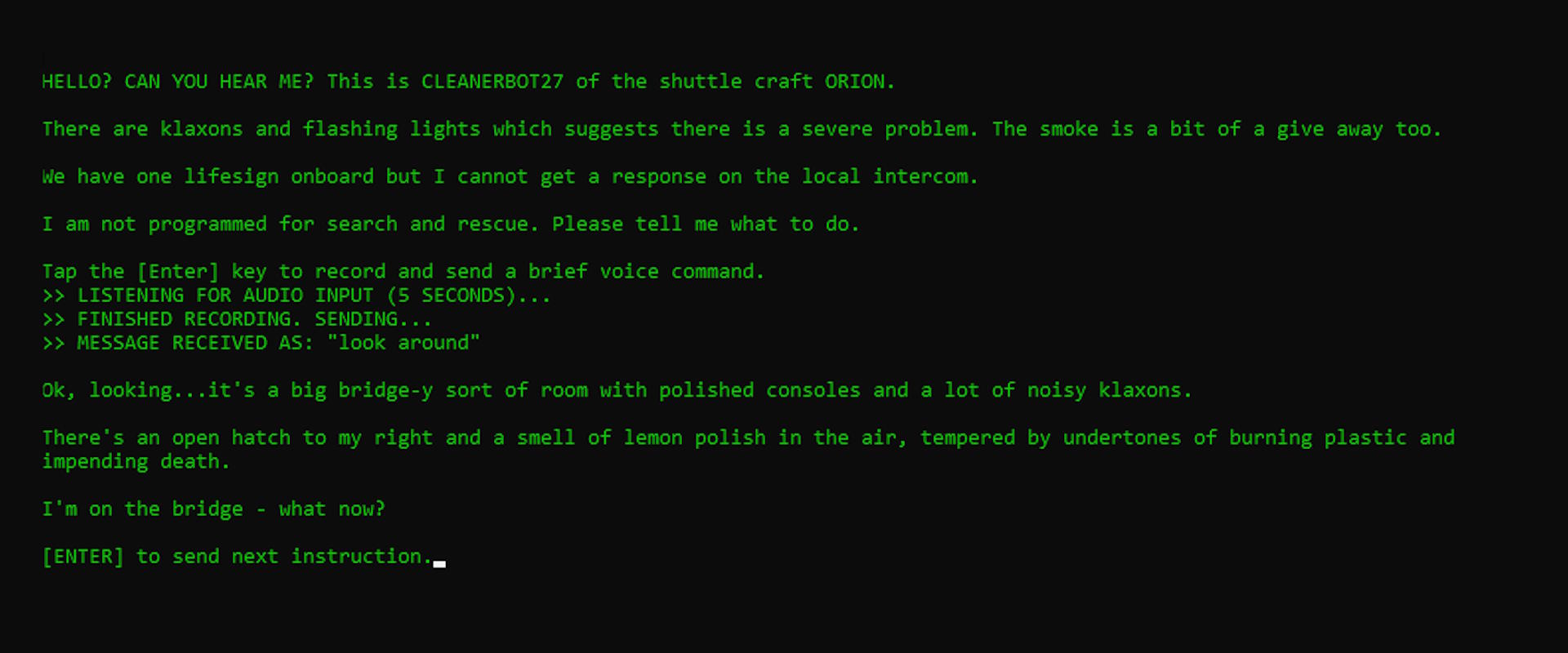

I created a scenario where you (the player) communicate remotely with a distant Cleanerbot, directing it on a search and rescue mission aboard a burning spaceship; the remote link conveniently excuses any latency between input and response. Hey, if you want a real narrative designer, talk to this guy. I knew latency was going to be an issue because not only was I going to use an API to determine player intent, I was also going to have the player speak rather than type their inputs. This is a 21st-century text adventure, after all.

And like most people these days, I planned to use AI for as much of the programmatic heavy lifting as possible. Mixed results there, but I give tips on how to work with an AI coding assistant later in this post.

Technology and workflow

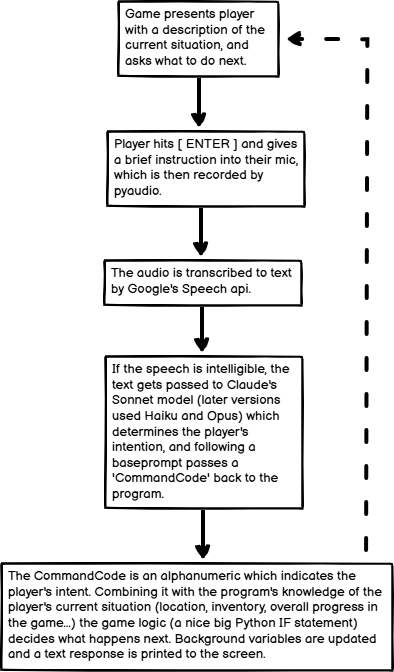

So to recap: the game puts some text on the screen (“You’re in the ready room”), the player sends the command “Tell me what you can see” to Cleanerbot, a Google API converts that speech to text (with error handling to cope with empty messages), and the game sends the result to Claude.
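The post doesn’t show the speech-to-text code, so here is a minimal sketch of just the empty-message handling. It assumes transcription is done by a function you pass in, for example a wrapper around `Recognizer.recognize_google` from the SpeechRecognition package that returns None when that call raises `UnknownValueError`. The names `get_player_command` and `transcribe`, and the three-attempt limit, are hypothetical choices for illustration, not the game’s actual code.

```python
from typing import Callable, Optional


def get_player_command(
    transcribe: Callable[[], Optional[str]],
    max_attempts: int = 3,
) -> Optional[str]:
    """Ask for spoken input until a non-empty transcript arrives.

    `transcribe` is a hypothetical callable that records the player and
    returns the recognised text, or None / "" when nothing was understood.
    """
    for attempt in range(1, max_attempts + 1):
        text = transcribe()
        # Treat None, "" and whitespace-only results as an empty message.
        if text and text.strip():
            return text.strip()
        print(f"Cleanerbot: Sorry, I didn't catch that ({attempt}/{max_attempts}).")
    # Give up so the game loop can decide what to do next.
    return None


# Usage with a fake transcriber standing in for real speech-to-text:
replies = iter(["", "   ", "Tell me what you can see"])
print(get_player_command(lambda: next(replies)))
```

Injecting the transcriber keeps the retry logic testable without a microphone; only the non-empty command ever gets sent on to Claude.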

Claude checks whether the player’s input matches any of the commands in the baseprompt, and reckons it’s equivalent to ‘search’, which is associated with CommandCode 0000. The game logic knows the player is in the Ready Room, and now Claude’s response shows that the player has told Cleanerbot to look around.

```python
# Excerpt from the game's main if/elif chain: the player is in the Ready Room,
# Claude mapped their input to CommandCode 0000 (search), and Cleanerbot
# hasn't described this room yet.
elif location == "readyroom" and "0000" in claude_response_text and not seenreadyroom:
    print('Ok, looking...this is where the crew usually sleeps and hangs out on the longer runs. Nobody\'s here.\n\nThere are bunks by the wall, some chairs, and a table with a book on it. You know, crew stuff. I\'m not normally allowed in here, and going by the state of the furniture, it shows.\n\nThere\'s the open hatchway leading to the bridge, and a closed hatch opposite. There also seems to be a closed access panel in the floor.\n')
    seenreadyroom = True  # don't repeat the full description next time
    seenpanel = True      # the floor access panel is now known to the player
    nextaction()          # remind the player where Cleanerbot is, ask for the next instruction
```

Combining that with the fact that Cleanerbot hasn’t seen the Ready Room yet, the game logic gives the player a description, and then nextaction() reminds the player where Cleanerbot is and asks for the next instruction.
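The snippet above tests `"0000" in claude_response_text`, which works as long as no code ever appears inside a longer number in Claude’s reply. A minimal sketch of a stricter check, assuming CommandCodes are always exactly four digits (that assumption and the function name `extract_command_code` are mine, not the game’s): pull out one standalone code with a regular expression and compare that instead.

```python
import re
from typing import Optional

# Assumption: CommandCodes are exactly four digits, e.g. "0000" for search.
# The lookarounds reject digits that are part of a longer number.
COMMAND_CODE = re.compile(r"(?<!\d)(\d{4})(?!\d)")


def extract_command_code(response_text: str) -> Optional[str]:
    """Return the first standalone four-digit code in Claude's reply, or None."""
    match = COMMAND_CODE.search(response_text)
    return match.group(1) if match else None


print(extract_command_code("CommandCode: 0000"))          # 0000
print(extract_command_code("I think the code is 00001"))  # None
```

The branch condition could then compare `extract_command_code(claude_response_text) == "0000"`, so a reply containing, say, `10000` no longer triggers the search branch by accident.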

The baseprompt is crucial

Later in the game, as well as text feedback, the player hears another character talking to them (via text-to-speech with pyttsx3), but the main thing is the way the baseprompt detects intent. Being able to interpret all kinds of player phrasing saves me from having to write hundreds of rows of IF statement logic.

In the next version of the game, which is hosted on the web, I moved the baseprompt into its own file. You can read it in full here (contains spoilers), but in brief, it:

- opens by explaining that I want Claude to turn the player’s input into CommandCodes; then

- defines synonyms specific to the game (eg ‘escape pod’ can be called ‘the pod’); then

- lists player intents and their corresponding CommandCodes; then

- closes with housekeeping that reinforces how Claude should respond, specifically the formatting of the output.
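The full baseprompt isn’t reproduced in this post, but its four-part structure can be sketched as a function that assembles the prompt, plus a call to Anthropic’s Messages API through the official `anthropic` Python SDK. Everything here is a placeholder of mine rather than the game’s real prompt: the wording, the example synonym and intents, the code `0001`, the `9999` fallback and the model name. The API call needs the SDK installed and an `ANTHROPIC_API_KEY` environment variable.

```python
from typing import Dict


def build_baseprompt(synonyms: Dict[str, str], intents: Dict[str, str]) -> str:
    """Assemble a baseprompt in four parts: intro, synonyms, intents, housekeeping."""
    intro = (
        "You convert a player's instruction in a text adventure game "
        "into a single CommandCode."
    )
    synonym_lines = "\n".join(f"- '{alias}' means '{name}'" for alias, name in synonyms.items())
    intent_lines = "\n".join(f"- {intent}: {code}" for intent, code in intents.items())
    housekeeping = (
        "Reply with the CommandCode only, and no other text. "
        "If nothing matches, reply with 9999."  # placeholder fallback code
    )
    return (
        f"{intro}\n\nSynonyms:\n{synonym_lines}\n\n"
        f"Intents and CommandCodes:\n{intent_lines}\n\n{housekeeping}"
    )


def ask_claude(baseprompt: str, player_input: str) -> str:
    """Send one player instruction to Claude and return the raw reply text."""
    import anthropic  # imported lazily so build_baseprompt works without the SDK

    client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment
    message = client.messages.create(
        model="claude-3-haiku-20240307",  # placeholder: any available Claude model
        max_tokens=10,
        system=baseprompt,
        messages=[{"role": "user", "content": player_input}],
    )
    return message.content[0].text


prompt = build_baseprompt(
    synonyms={"the pod": "escape pod"},
    intents={"search / look around": "0000", "take an item": "0001"},
)
print(prompt)
```

If Claude follows the housekeeping line, `ask_claude(prompt, "Tell me what you can see")` should come back with just `0000`, which is what the game logic then checks for. Passing the baseprompt as the `system` parameter keeps it separate from the player’s words.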

Tips on working with an AI coding assistant

I’ve been working with computers since my teens, but I wouldn’t call myself a coder so much as someone who works with coders. I can get by in Python, but a lot of the time I found myself looking for help, a copilot if you will, from ChatGPT and Claude. Overall (this is May/June 2024) I found ChatGPT running GPT-4o to be the most helpful, able to examine hundreds of lines of code, whereas Claude would confidently mansplain complete nonsense when asked anything beyond a simple W3Schools tutorial.

At one point Claude even made up instructions for configuring its own API, citing documentation that didn’t exist and answering follow-up questions by describing ‘new’ functionality that couldn’t possibly work. So for coding I mainly worked with ChatGPT. Perhaps I’d have got a better result using GitHub Copilot instead of sending my queries through the chat portal, but I doubt it. Both are powered by OpenAI models, after all.

My problems with ChatGPT fell into three categories:

1. Only reading the first bit of a file and then hallucinating the rest

Fix 1: never giving it a big Python file as an attachment, but instead pasting hundreds of lines of code at the end of a prompt that is very specific about what I do, and do not, want it to do.

Fix 2: warning the AI to disregard any similar files it might have seen in the past and to parse this new one thoroughly because (treating it like a four-year-old) before I ask it to help me with any coding issue, I will give it a brief quiz (eg what are the possible values for the ‘location’ variable?) to verify its familiarity with the code. So it should read the file pasted below and tell me when it’s ready for my first quiz question.
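Marking that quiz is easier if you already have the answer key. A minimal sketch using Python’s standard `ast` module (the function name `literal_values` is hypothetical): it collects the string literals assigned to `location` or compared against it, which covers the `location == "readyroom"` style of check used in the game. It won’t see values built at runtime or tested with `in`, so treat the result as a starting point.

```python
import ast
from typing import Set


def literal_values(source: str, name: str = "location") -> Set[str]:
    """Collect string literals assigned to, or compared with, the variable `name`."""
    values: Set[str] = set()
    for node in ast.walk(ast.parse(source)):
        # e.g. location = "bridge"
        if isinstance(node, ast.Assign) and isinstance(node.value, ast.Constant):
            targets_name = any(isinstance(t, ast.Name) and t.id == name for t in node.targets)
            if targets_name and isinstance(node.value.value, str):
                values.add(node.value.value)
        # e.g. location == "readyroom" (variable on the left-hand side only)
        elif isinstance(node, ast.Compare) and isinstance(node.left, ast.Name) and node.left.id == name:
            for comparator in node.comparators:
                if isinstance(comparator, ast.Constant) and isinstance(comparator.value, str):
                    values.add(comparator.value)
    return values


game_source = '''
location = "bridge"
if location == "readyroom" and "0000" in claude_response_text:
    pass
elif location == "bridge":
    pass
'''
print(sorted(literal_values(game_source)))  # ['bridge', 'readyroom']
```

Because `ast.parse` only reads the code, the snippet doesn’t need the game’s other variables to exist.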

Fix 3: temporarily deleting hundreds of lines of game logic and using comments in the code to explain to ChatGPT that the missing functionality isn’t relevant to the task and has been removed for easier file handling. This saved it from wasting tokens reading lots of very similar content, which was mostly text to show the player wrapped in pretty simple logic.
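That trimming can be partly automated. A minimal sketch, assuming you wrap the logic you want to hide in `# BEGIN-OMIT` / `# END-OMIT` marker comments (a hypothetical convention invented here, not something the game’s source uses): each marked region is swapped for a single comment telling ChatGPT the code was removed on purpose.

```python
def strip_marked_regions(source: str, note: str = "omitted: not relevant to this task") -> str:
    """Replace each # BEGIN-OMIT ... # END-OMIT region with a single comment."""
    out = []
    skipping = False
    for line in source.splitlines():
        stripped = line.strip()
        if stripped == "# BEGIN-OMIT":
            skipping = True
            # Keep the marker's indentation so the result still reads like Python.
            indent = line[: len(line) - len(line.lstrip())]
            out.append(f"{indent}# ... {note} ...")
        elif stripped == "# END-OMIT":
            skipping = False
        elif not skipping:
            out.append(line)
    return "\n".join(out)


game = """def describe_rooms():
    # BEGIN-OMIT
    print('lots of room descriptions')
    # END-OMIT
    return input('> ')
"""
print(strip_marked_regions(game))
```

Running it on a copy of the file means the original stays untouched, so there’s nothing to restore once ChatGPT has answered.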

2. Missing stuff out

As well as making stuff up with hallucinations, it would sometimes leave things out when refactoring the code I provided. It’s difficult to spot that a function has vanished or a variable has been forgotten when looking at hundreds of lines of code.

Fix 1: never asking it to rewrite an entire file. In fact, every prompt I gave specifically forbade it from trying to do so and instead asked it to justify changes in the form of ‘before’ and ‘after’ code snippets. That way there’s less for me to compare when I’m verifying the update. The downside is that this can mean a lot of copy-paste rather than trusting ChatGPT to handle the change.

Fix 2: explicitly forbidding it from ‘missing out’ content that is commented out. I’d often find that functions I’d temporarily commented out for whatever reason were left out of any updates returned by ChatGPT. Even when it acknowledged this instruction, it was still 50/50 whether it would follow it. Stay on your toes.
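A cheap safety net for both fixes is to diff the code you pasted in against what comes back. A minimal sketch using Python’s standard `difflib` module (the function name `dropped_lines` is hypothetical): it lists every non-blank original line, commented-out ones included, that no longer appears in ChatGPT’s version. Lines that were merely edited also show up, so treat the list as things to check rather than definite losses.

```python
import difflib
from typing import List


def dropped_lines(before: str, after: str) -> List[str]:
    """Return non-blank lines present in `before` that were removed in `after`."""
    diff = difflib.ndiff(before.splitlines(), after.splitlines())
    # ndiff prefixes removed lines with "- "; skip blank-line churn.
    return [line[2:] for line in diff if line.startswith("- ") and line[2:].strip()]


original = """def show_map():
    pass
# def debugroom():
#     pass
seenpanel = False"""
returned = """def show_map():
    pass
seenpanel = False"""
for line in dropped_lines(original, returned):
    print("Missing:", line)
```

Here the commented-out `debugroom` function is exactly the kind of silent omission described above, and both of its lines are reported.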

3. Getting trapped in a loop

If I went to ChatGPT with a problem, 80% of the time it would come back with a workable, plausible route to a solution. Perhaps that was because toward the end I was getting lazy and asking it to correct the syntax of my pseudocode (or buggy code) rather than just focus on the gnarly stuff.

But sometimes the solution just didn’t emerge. When I found that it wasn’t (we weren’t?) able to find a solution despite multiple rounds of code updates, I’d scroll back through the conversation and closely examine what it was changing.

It’s easy to spot when it asks you to change a line of code and then next time tells you to put it back again, or when a change is little more than adding and removing comments rather than altering functionality. It’s more of an arse to realise that it has had you updating three interconnected functions and the combinations are, when you step back, just five different ways of trying the same thing.

Fix 1: vigilance. And when that fails, the sense at 3am that you’re making a lot of changes but the thing you want isn’t working yet. It doesn’t solve the problem, but realising that you and your copilot are spiraling helps end the nonsense.

Fix 2: come at it from a completely different angle. Or even RTFM. Perhaps the reason you can’t configure an API to work with a particular library isn’t the syntax but that it’s simply not meant to work that way. So tell the AI that you’re scrapping that approach and you want to use something else instead. If you’re about to ask it to suggest that something else, be very wary.

Remember that it doesn’t actually care and doesn’t actually know everything

Even if you catch it in a flat-out lie, it can and will only apologise. Profanity helps. It can be cathartic, and it does seem effective in getting the bot to stop wasting i/o on transparent apologies and pledges to ‘…adopt a more focused approach’.

It is, at its core, a really sophisticated autocomplete engine, so always sanity-check its suggestions. Not just the syntax of the responses, or the random bits of added or removed code: be ready to challenge its suggestions too. “No, I would prefer a solution which involves less refactoring”, “Are you sure this is the best approach for an app with multiple simultaneous users?”, “What are the drawbacks of this solution?”…

I wouldn’t have completed, or attempted, the project without the AI’s help, but I also know that working with one of my developer colleagues or a remote freelancer would probably have taken much less time, if the budget had been there.

Go ahead and play with the GitHub codebase for yourself here or take a look at the online version.

Just as I found that my experience writing specific JIRA tickets with features and acceptance criteria made it easy to package tasks for an AI, working with remote freelancers, where language, distance and a lack of personal engagement can sometimes be a problem, also helped me improve the quality of my prompts.